Bhashini ASR


Advanced

Bhashini is an initiative aimed at giving all Indians easy access to the Internet and digital services in their native languages. Its primary goal is to increase the amount of content available in Indian languages, promoting digital inclusivity and accessibility for a broader population.

Bhashini in Glific

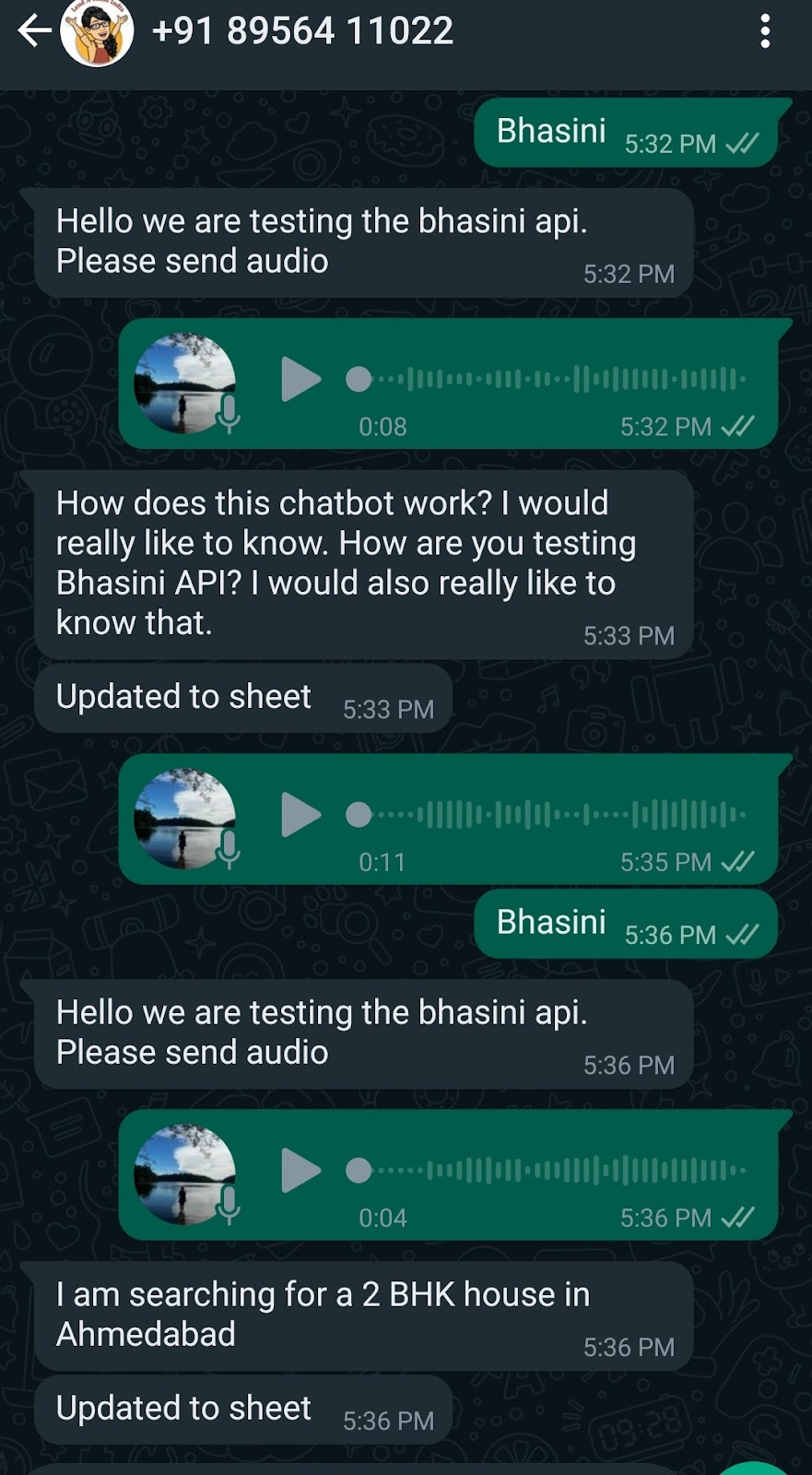

- Glific uses Bhashini APIs for speech recognition.

- A WhatsApp user can share an audio file, which can be converted to text in the language chosen by the user.

- The converted text can be stored in Google Sheets, used in flows, or used however the use case requires.

Sample Chat

Steps to integrate Bhashini ASR in Glific flows

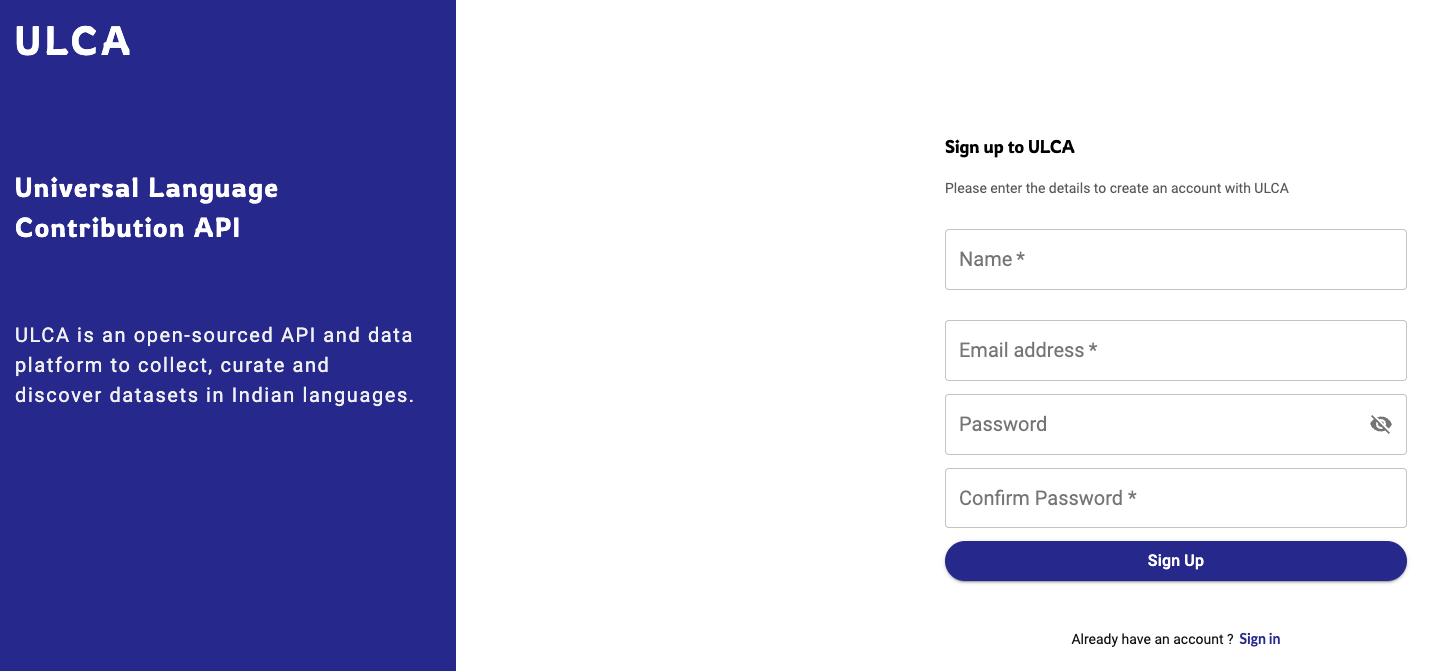

Step 1: Create an account on the Bhashini website using your name and email ID, then verify the account using the email you receive.

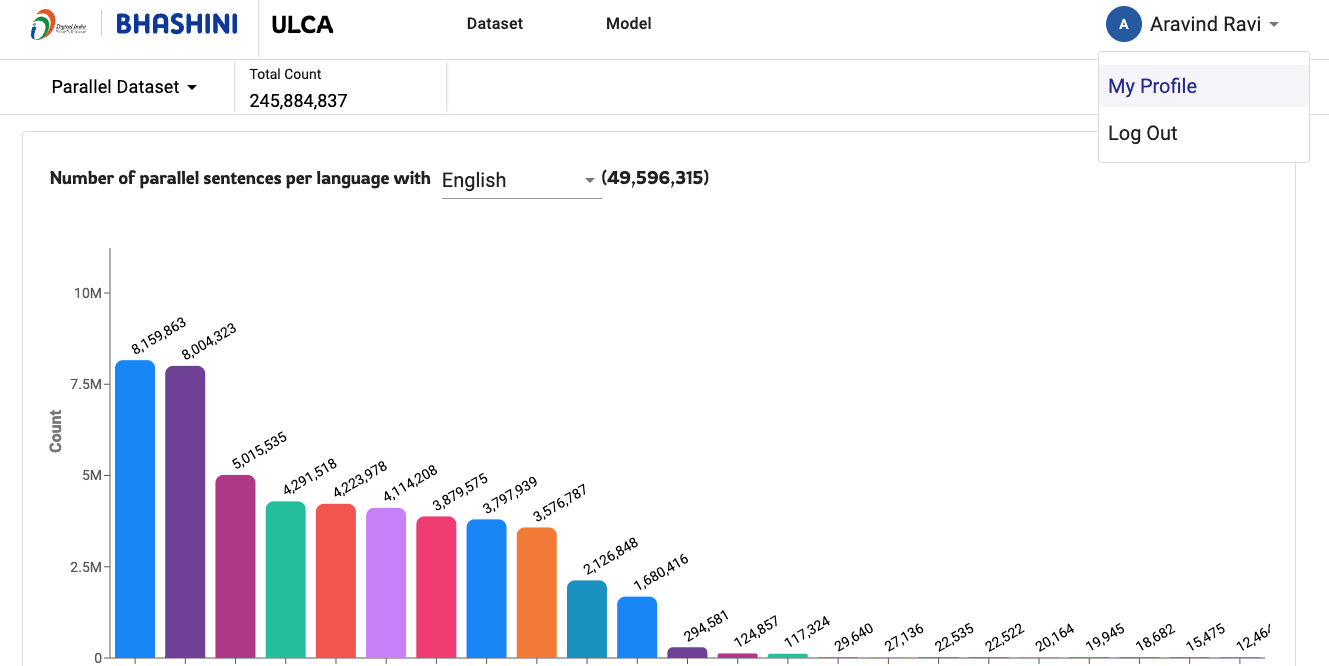

Step 2: Go to ‘My Profile’ in the top-right corner.

Step 3: Generate a ‘ULCA API Key’ (top-right corner). Also make a note of the User ID shown above the button; it is used inside the webhook call in the Glific flow.

Step 4: Make a note of the generated API key (here, it starts with 01af..). It is required inside the webhook call that the Glific flow uses to call the Bhashini API.

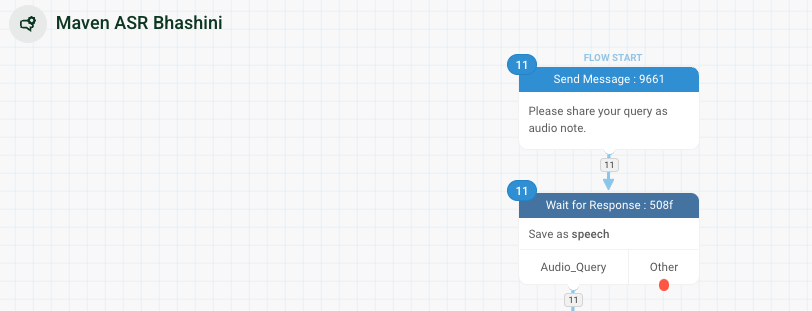

Step 5: Now log in to your Glific instance and create a flow. This example uses a flow called Maven ASR Bhashini, which records incoming audio queries in a Google Sheet. The flow starts by asking the user to share their query as a voice note, which is saved as ‘speech’.

Download links for a few sample flows are given in the Sample Flow Links section below, so you can test them yourself.

- After the audio is captured, the Bhashini ASR API is called using a webhook function called ‘speech_to_text_with_bhasini’, and the response is stored as ‘speech2text’.

- The function name is predefined: always use the name ‘speech_to_text_with_bhasini’ to call the Bhashini API.

- The result name for the response (speech2text here) can be anything you like, just like any other flow variable.

- The webhook body is shown below. Update its parameters as follows:

- speech: Set this to the result name given to the captured audio file. Here, it is saved as ‘speech’ (Step 5), so the value is @results.speech.input. (If the audio note had been saved as ‘query’, the value would be @results.query.input.)

- userID: Set this to the User ID noted on the Bhashini website (see Step 3).

- ulcaApiKey: Set this to the ULCA API key generated on the Bhashini website (see Step 4).

- pipelineId: Keep the value shown in the screenshot below: “64392f96daac500b55c543cd”

- base_url: Keep the value shown in the screenshot below: “https://meity-auth.ulcacontrib.org/ulca/apis/v0/model/”

- contact: Keep the value shown in the screenshot below: “@contact”. You can read more about the variables used inside the webhook body/Bhashini APIs here.

- Once the webhook is updated, you can refer to the transcribed text as ‘@results.speech2text.asr_response_text’ anywhere inside the flow.
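The webhook body itself appears only as a screenshot, so the following is a minimal Python sketch that assembles an equivalent JSON body from the parameters listed above. The key names and fixed values come from this guide; the helper names (`speech_value`, `asr_text_ref`, `build_webhook_body`) are hypothetical, and the credentials are placeholders. It does not call Bhashini itself (Glific does that when the webhook runs); it only shows how the result names map onto the `@results...` expressions.

```python
import json

# Values this guide says to keep unchanged.
PIPELINE_ID = "64392f96daac500b55c543cd"
BASE_URL = "https://meity-auth.ulcacontrib.org/ulca/apis/v0/model/"


def speech_value(result_name: str) -> str:
    """Flow expression for the captured audio, e.g. 'speech' -> '@results.speech.input'."""
    return f"@results.{result_name}.input"


def asr_text_ref(webhook_result_name: str) -> str:
    """Flow expression for the transcribed text stored under the webhook's result name."""
    return f"@results.{webhook_result_name}.asr_response_text"


def build_webhook_body(audio_result_name: str, user_id: str, ulca_api_key: str) -> str:
    """Return a JSON body for the 'speech_to_text_with_bhasini' webhook (hypothetical helper)."""
    body = {
        "speech": speech_value(audio_result_name),
        "userID": user_id,            # User ID from Step 3
        "ulcaApiKey": ulca_api_key,   # ULCA API key from Step 4
        "pipelineId": PIPELINE_ID,
        "base_url": BASE_URL,
        "contact": "@contact",
    }
    return json.dumps(body, indent=2)


if __name__ == "__main__":
    # Placeholder credentials: replace with the values from your Bhashini profile.
    print(build_webhook_body("speech", "YOUR_USER_ID", "YOUR_ULCA_API_KEY"))
    print(asr_text_ref("speech2text"))
```

If the voice note were saved as ‘query’ instead, calling `build_webhook_body("query", ...)` would set `speech` to `@results.query.input`, matching the rule described for the speech parameter.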

The language of the text returned by Bhashini depends on the user's language preference. For instance, if a user has selected Hindi, the response from Glific will be in Hindi script.

- You can additionally connect the webhook to a ‘Link Google Sheet’ node to record the transcribed text in a spreadsheet, as shown in the flow below.

The Google Sheet node is configured as shown below. The fourth variable, ‘@results.speech2text.asr_response_text’, captures the transcribed text in the fourth column.

The incoming audio notes in this flow are captured in Google Sheets as shown below.
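Because the node fills columns in the order its variables are listed, the fourth variable lands in column D. The Python sketch below illustrates that positional mapping. Only the fourth entry comes from this guide; the first three variables and the helper names (`column_letter`, `column_map`) are hypothetical placeholders, not the actual configuration from the screenshot.

```python
# Hypothetical variable list for the 'Link Google Sheet' node.
# Only the fourth entry is taken from this guide; the others are placeholders.
sheet_variables = [
    "@contact.name",                            # hypothetical
    "@contact.phone",                           # hypothetical
    "@results.speech.input",                    # hypothetical: the captured audio
    "@results.speech2text.asr_response_text",   # transcribed text (from this guide)
]


def column_letter(index: int) -> str:
    """Convert a 0-based column index to a spreadsheet column letter (0 -> 'A', 26 -> 'AA')."""
    letters = ""
    n = index + 1
    while n > 0:
        n, rem = divmod(n - 1, 26)
        letters = chr(ord("A") + rem) + letters
    return letters


def column_map(variables: list[str]) -> dict[str, str]:
    """Map each variable to the column it fills, in list order."""
    return {column_letter(i): var for i, var in enumerate(variables)}


if __name__ == "__main__":
    for column, variable in column_map(sheet_variables).items():
        print(column, variable)
```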

Sample Flow Links

Test the sample flows yourself by importing them into your Glific instance.